Does ChatGPT-Generated Text Hurt Your SEO?

No. For now.

Instead of focusing on how content is created, there is a better way to look at the issue of AI-generated content.

- What is the purpose of the content? Is it the main focus or a sidenote?

- How much effort did you put into creating it, and does it match the expectation of the reader?

- How much generated content is there in total?

- Did you bother to read the content before using it?

- Did you bother to read this before creating it?

There are times when AI-generated content is perfectly fine to use, although I believe there are more times when it isn't.

Content Effort

In its guidance on Google search and AI content, Google says:

Google's ranking systems aim to reward original, high-quality content that demonstrates qualities of what we call E-E-A-T: expertise, experience, authoritativeness, and trustworthiness.

An LLM has no experience, no expertise, no authority, and its answers can't be trusted to be factual.

So by definition, it doesn't meet the quality threshold unless there is a human involved in editing and adjusting it.

Then the article says (emphasis mine):

Our focus on the quality of content, rather than how content is produced, is a useful guide that has helped us deliver reliable, high quality results to users for years.

In other words, crap content is out, generated or not.

(And, yeah, I know. Google's very good at ranking crap content. I think we need to take these notes as a sign of where it wants to go, not where it is today.)

We can also look at the Quality Raters' Guidelines. And for that, I will switch to talking about the level of effort because that's one way Google measures quality.

It says:

The quality of the [main content] can be determined by the amount of effort, originality, and talent or skill that went into the creation of the content.

Under 'effort' it says:

Consider the extent to which a human being actively worked to create satisfying content.

And in section 4.6.6:

The Lowest rating applies if all or almost all of the [main content] on the page... is copied, paraphrased, embedded, auto or AI generated, or reposted from other sources with little to no effort, little to no originality, and little to no added value for visitors to the website.

I could go on. But I think we know what Google is saying: there is a fine line between LLMs being useful and harmful, and that's the line it's trying to describe.

Is ChatGPT-Generated Content "Helpful"?

At the risk of bringing everyone out in hives, let's think about "helpful content".

In some cases, content generated by an LLM can be helpful. Let's think about:

- Ecommerce product pages

- Landing pages

- Data tables or charts

- Forms

- Technical documentation

- Page metadata

My view is that some of this content can be AI-generated.

For example:

- I don’t think there’s anything wrong with producing AI-generated content in a programmatic SEO project if it's done with good intentions. That's assuming the content is fairly short and informational, and it's not the "MC" - the "main content" on the page.

- If you need a description of a product, location, or service, you can use a tool like GPT for Sheets to knock that out. The results need to be edited because they will always be wrong. But it'll save some time. And, to be honest, I've never met a writer who was looking forward to filling in 2,000 rows on a spreadsheet. I did it right at the start of my writing career, and I never want to do it again.

- Who reads meta descriptions? Nobody. Not even Google. If you want to generate them, I won't fight you on that.

- Alt text: same. I'll outsource it.

- Charts: I'm not a designer, so I'll use an LLM in a pinch.

All of this is secondary to writing.

So what shouldn't be generated?

- Anything you want humans to read, trust, enjoy, share, and learn from.

- Any piece of content designed to sell something.

I would rather poke myself in the eye with a sharp stick than read an AI-generated blog post. So there's your line.

Is Google Able to Detect "Helpful" Content?

No, I don't think so.

The Helpful Content Update caused people to lose their jobs and livelihoods. Not only did Google seem to underestimate the HCU's impact, it seemed to be unable to get the situation under control, or even understand what went wrong.

In October 2024, it invited a small group of content creators to its HQ to discuss the HCU. This quote from Pandu Nayak says a lot:

"I suspect there is a lot of great content you guys are creating that we are not surfacing to our users, but I can't give you any guarantees unfortunately."

Danny Sullivan allegedly said:

"There’s nothing wrong with your sites, it’s us."

"Your content was not the issue."

Wait.

The Helpful Content Update destroyed the site, but the content wasn't the problem?

There's more context in this excellent blog post:

Google’s elderly Chief Search Scientist answered, without an ounce of pity or concern, that there would be updates but he didn’t know when they’d happen or what they’d do. Further questions on the subject were met with indifference as if he didn’t understand why we cared.

My interpretation is that high-quality, high-effort content should rank consistently, but it doesn't. The HCU overshot massively and Google hasn't pulled it back enough yet.

We were asked multiple times how to tell the difference between a spam site and a good site. We were asked outright what features Google should add that we feel would be helpful. Honestly, this wasn't an event for us to get answers - Google wanted our feedback.

— Kim Snaith (@ichangedmyname) October 30, 2024

What Google says it wants and what it appears to reward are not the same thing. That's the painful truth. And chasing what works is usually more attractive in the short term.

Long term, I think Google will keep adjusting during core updates to try to do a better job of aligning rankings with guidelines, which it is not doing yet.

Is Publishing ChatGPT-Generated Text Risky?

Yes, I believe so.

The days of lookalike affiliate marketing content are gone. Clients still want it, in some cases, so I'm sure we'll continue to produce it if we can be paid for it.

But I think good writers will move on to a much more satisfying type of content: high-quality content with E-E-A-T.

Here's why I'm convinced.

1. Information Gain

Google says in black and white that it wants to see original content at the top of the SERPs.

LLMs produce unoriginal content.

Information goes in, information comes out in a different order. And not only that, but the information that is spat out is frequently wrong.

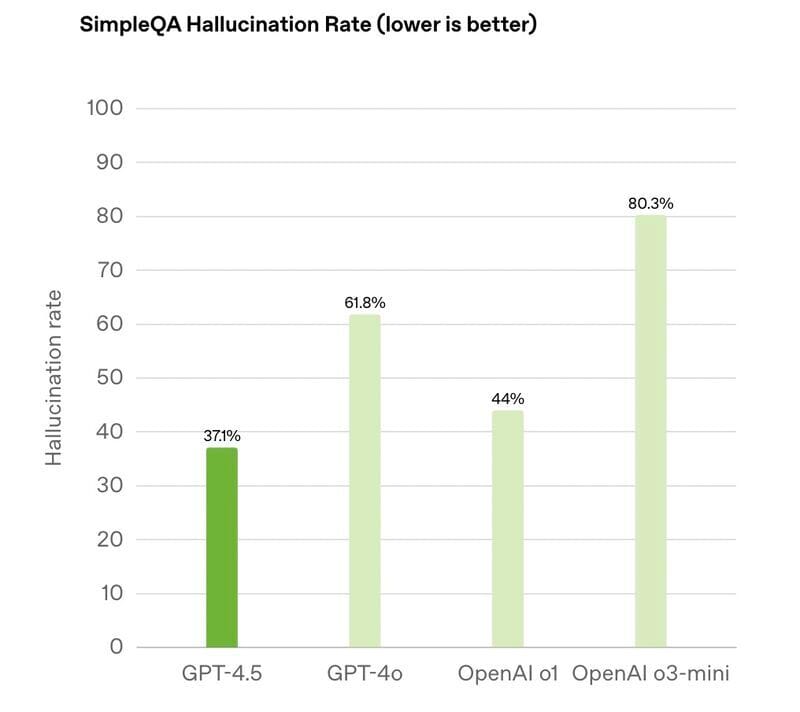

Let's pause to think how often LLMs are wrong. SimpleQA is designed to elicit hallucinations with questions that are much more difficult than typical prompts, but this is still an eye-opener:

And this is still true, in my experience:

Currently, the only way to reliably use LLMs is to know the answer to the question before you ask it.

So for good information that is actually accurate, you need a human involved in verifying information, expanding it, and improving quality. There is no way around it.

There should be something in every blog post that is new, surprising, innovative, or supported by unique personal experience.

2. The Tidal Wave Effect

Many companies use LLMs to produce as much content as possible as quickly as possible. I have heard CEOs of large marketing companies boast about scraping People Also Ask questions and publishing 100 pages of content per day to rank for them.

The updated Quality Raters' Guidelines warn against this.

I already wrote about the fact that Google is bad at detecting slop in search results, even though the patterns are obvious to you and me.

It may get better at detecting it. I don't think it's too difficult to pick out the word patterns.

When it does... will the content be reclassified as scaled content abuse? Spam?

Going back to Google's guidance, it says:

Using automation—including AI—to generate content with the primary purpose of manipulating ranking in search results is a violation of our spam policies.

So, again, the days of cheap content might not last forever.

If it works now, I don't think it will for long.

3. The Reputational Damage

Osama wrote about a study that indicates that humans don’t mind AI-generated content until you tell them it’s AI.

What if they can already tell?

I pushed for a couple of years to have author bios added to a client site. Not because they're good for SEO. (At the time I started pushing for them, nobody cared.)

I wanted them because they build trust.

I want to know whose advice I'm following and why I should believe them.

Now think about what happens if you generate your author bio with ChatGPT. It instantly discredits the author and all the content under their name.

Ahh. The bio is BS, so all the articles are as well. Makes sense.

4. Semantic Search

Everything I've said so far assumes that traditional Google search results still exist in two years' time.

Now, Google is still the dominant player in search, so I think it's highly unlikely that organic results will disappear.

But the intent of someone searching Google vs searching ChatGPT might be totally different. The conversion rates might be different as well.

Semantic search is important for AI Overviews, LLM search, and AI Mode. So by incorporating semantic keywords, hiring good writers, and prioritising quality, you'll have a better chance of future-proofing against the changes that are coming.

Augment With AI, but Don't Replace

I use LLMs for various tasks in my day-to-day work. I'm OK with using LLMs for small content tasks, productivity, and reducing the load on writers.

I draw the line at ChatGPT-generated blog posts. I draw the line at low effort content. And I believe that publishing it is a poor investment.