Patterns Will Save Us In The End

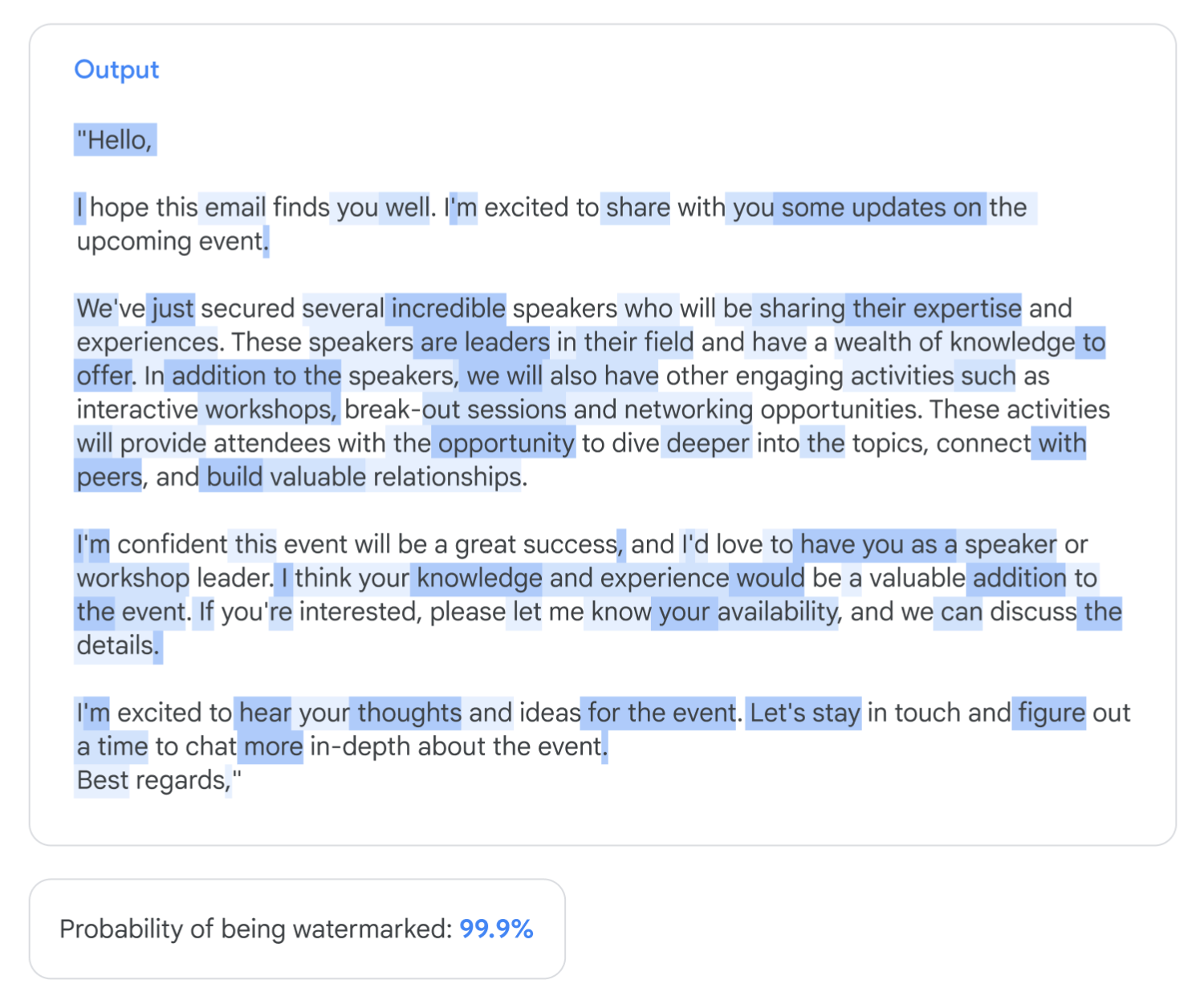

Google can detect some AI-generated content using a system called SynthID.

It does this by subtly adjusting which words a model picks as it generates text, leaving a statistical pattern (a watermark) behind.

SynthID can then detect those patterns, allowing it to flag the text as AI-generated.

SynthID isn't just for Gemini. It has been open source since October 2024, and you can try it on Hugging Face.

Right now, I don't think Google can detect AI-generated content in the SERPs.

But I think SynthID gives us clues as to how it might do that in the future.

And I think patterns already allow us, as human readers, to 'detect' AI-generated content on our own. Automating this pattern recognition would be the next logical step.

Repeat, Repeat

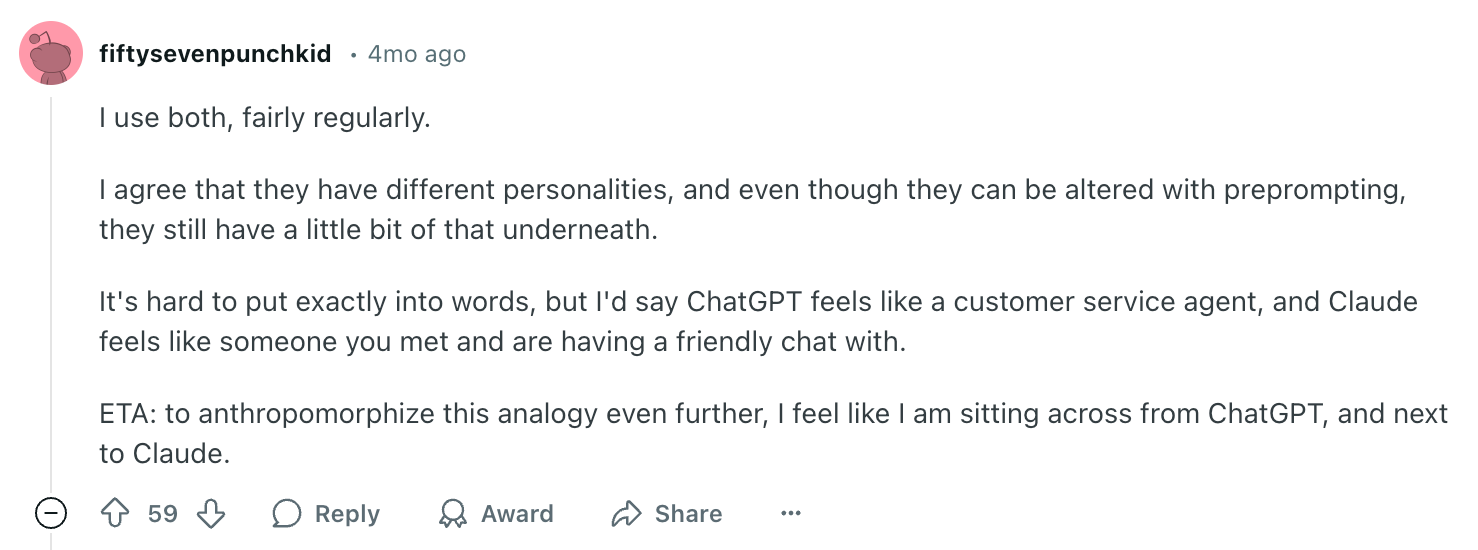

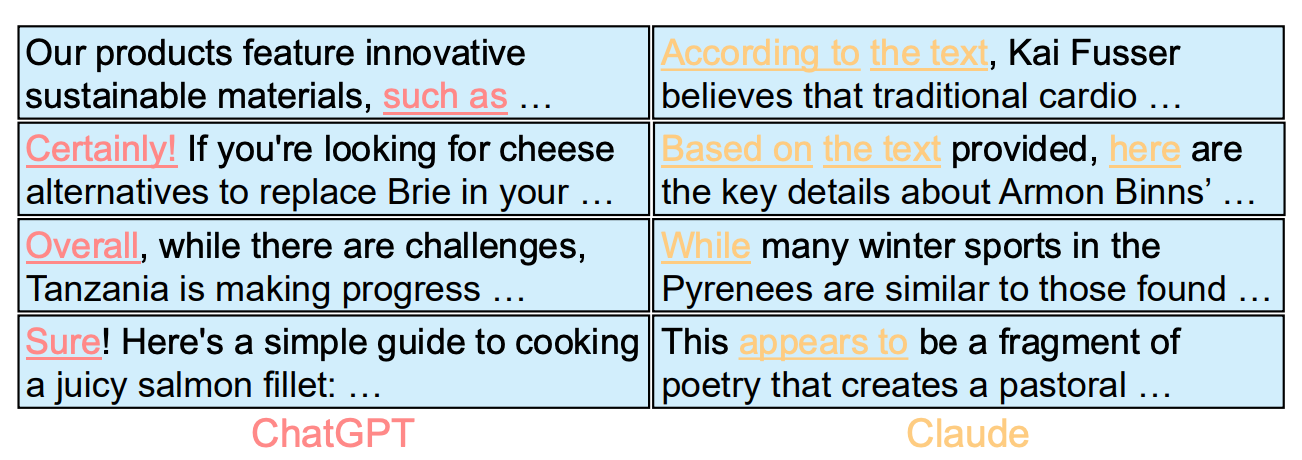

Do you have a preference for a particular LLM based on the way it formats its responses?

(When Claude 3.5 Sonnet was replaced with 3.5 New, I could 'feel' the change before Anthropic announced it.)

Or maybe you find some LLM quirks annoying?

(Ugh, thanks.)

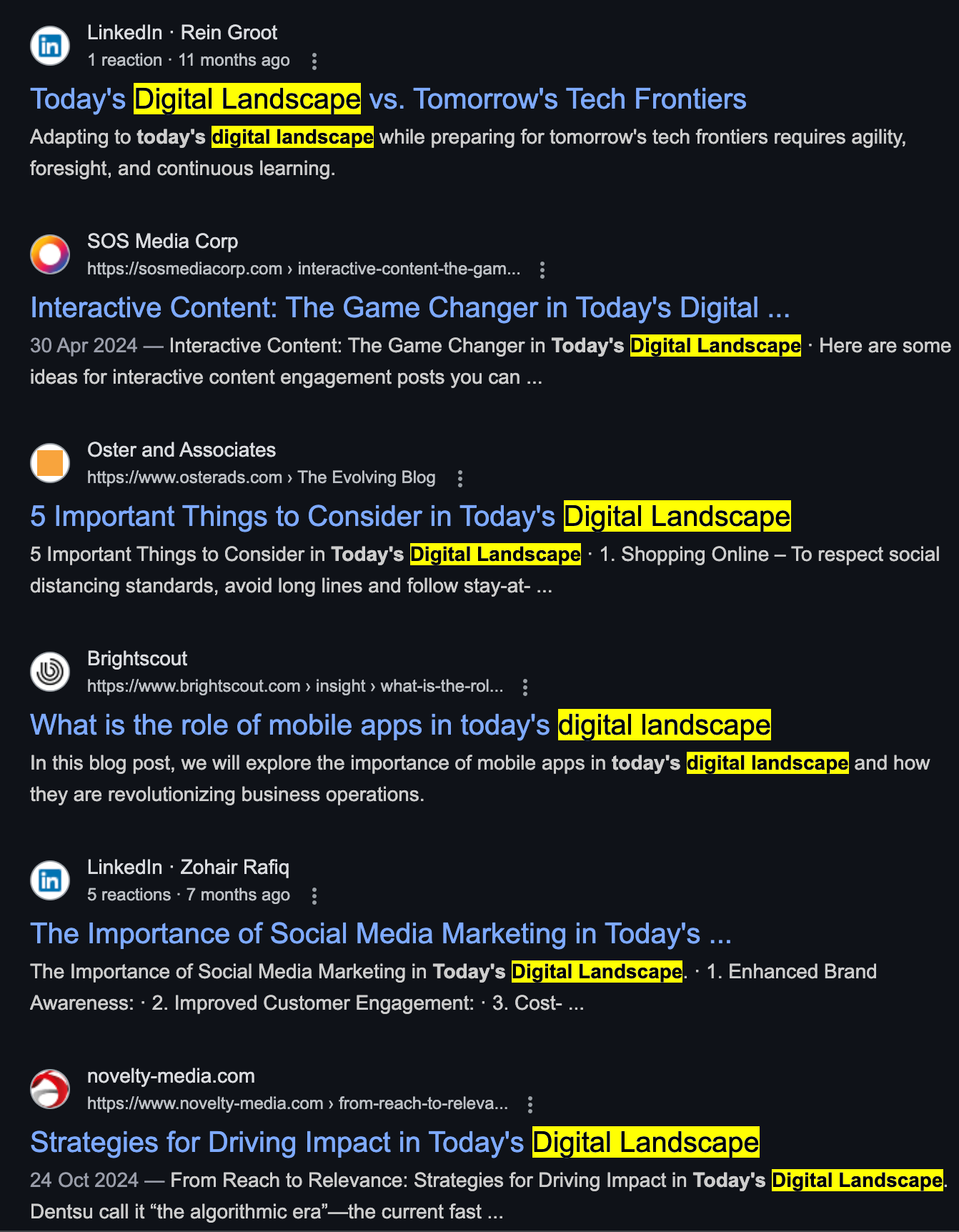

Researchers have found clear patterns in the way different LLMs respond. The patterns are distinct enough that it's possible to guess which LLM was used based on them alone.

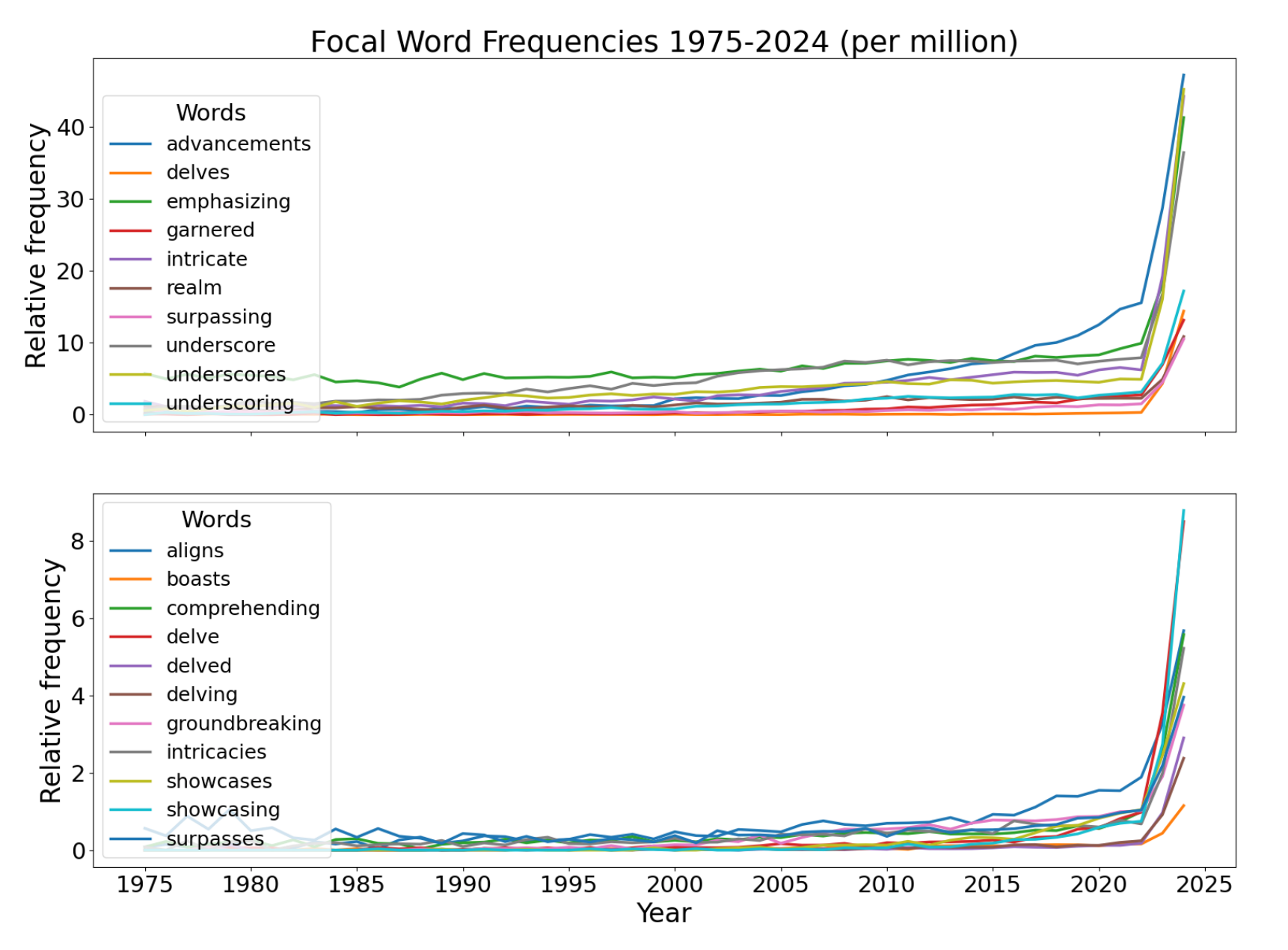

"Idiosyncrasies in Large Language Models" is not the first research paper to explore this. Here's another one, appropriately titled 'Why Does ChatGPT "Delve" So Much?'

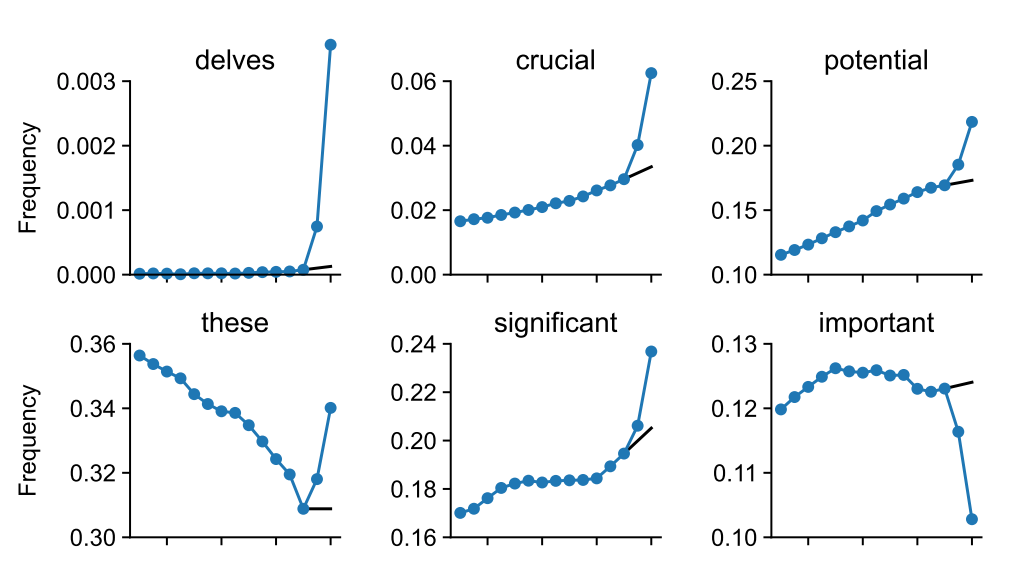

A third paper looks at "excess vocabulary": the overuse of words like "delve", "crucial", "showcasing", and "underscores".

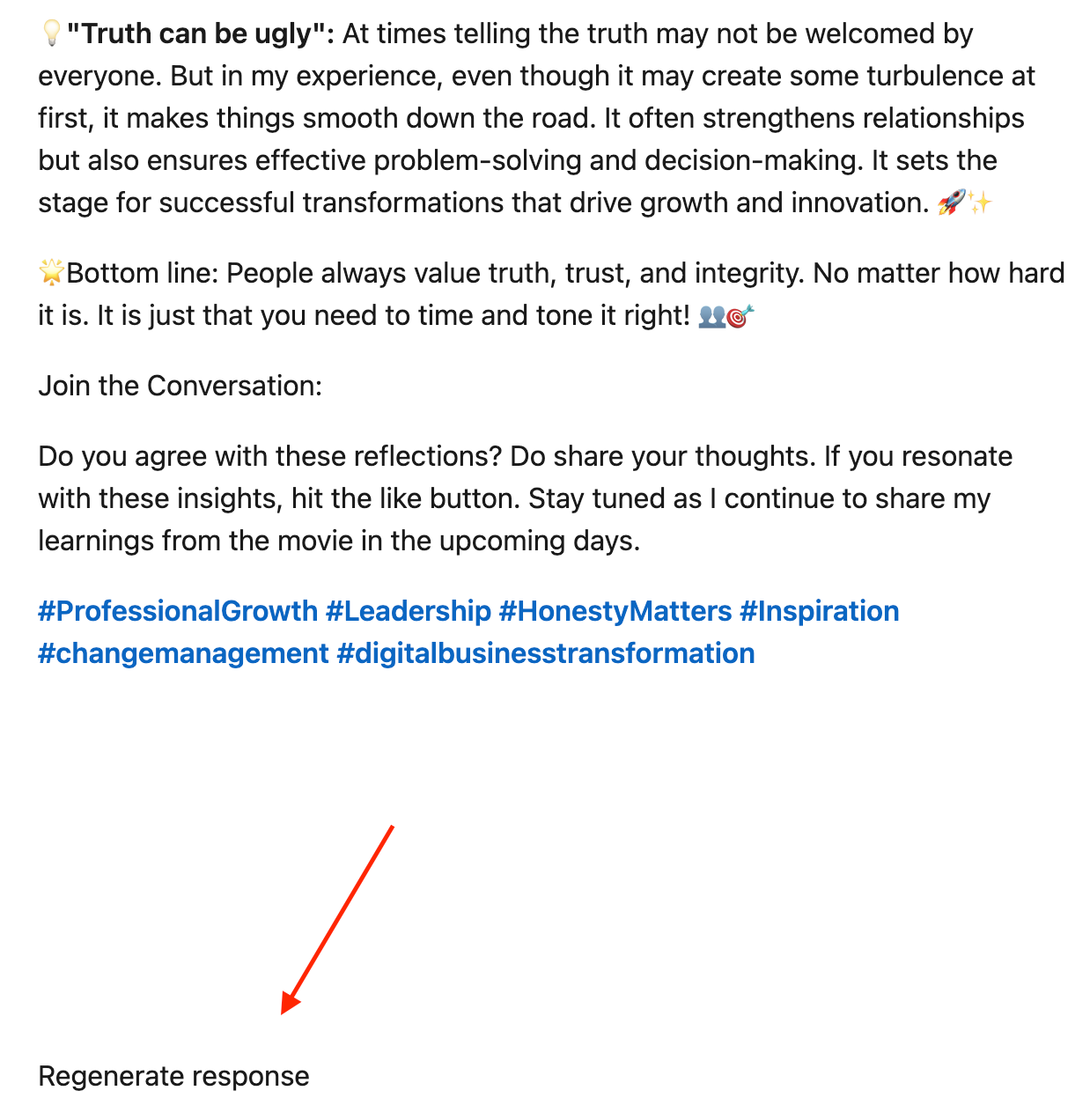
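To show what spotting this kind of pattern might look like at its very simplest, here's a toy Python sketch that counts how often the words named above turn up per 1,000 words. The word list and the `marker_rate` function are my own illustration, not taken from any of these papers, and a high score is only a hint, not proof: people use these words too.

```python
import re
from collections import Counter

# Illustrative marker list built from the words mentioned above.
# Hypothetical: this is NOT the full word list from any research paper.
MARKER_WORDS = frozenset(
    {"delve", "delves", "delving", "crucial", "showcasing", "underscores"}
)


def marker_rate(text: str, markers: frozenset = MARKER_WORDS) -> tuple[float, Counter]:
    """Return marker words per 1,000 words, plus a count of each marker found."""
    # Lowercase and split into simple word tokens (letters and apostrophes).
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0, Counter()
    hits = Counter(w for w in words if w in markers)
    rate = 1000 * sum(hits.values()) / len(words)
    return rate, hits


if __name__ == "__main__":
    sample = "This report delves into crucial trends, showcasing how the data underscores our point."
    rate, hits = marker_rate(sample)
    print(f"{rate:.1f} marker words per 1,000 words: {dict(hits)}")
```

A single word count like this is easy to fool. Anything working at Google's scale would presumably combine many signals, but the basic idea of measuring how far a text drifts from normal human word choice is the same.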

And I bet you have spotted slop in the wild.

You just need to know what to look for.

Then you see it everywhere.

Google could detect this now. I daresay it wouldn't even need SynthID.

It just needs to get better at picking up on the patterns that are already there.

Time to EEAT

If you think it doesn’t matter to Google whether content is AI-generated or not, I have two responses.

- Google says it wants to reward "original, high-quality" content. By definition, content generated by a large language model cannot be original.

- When does AI-generated content become spam?

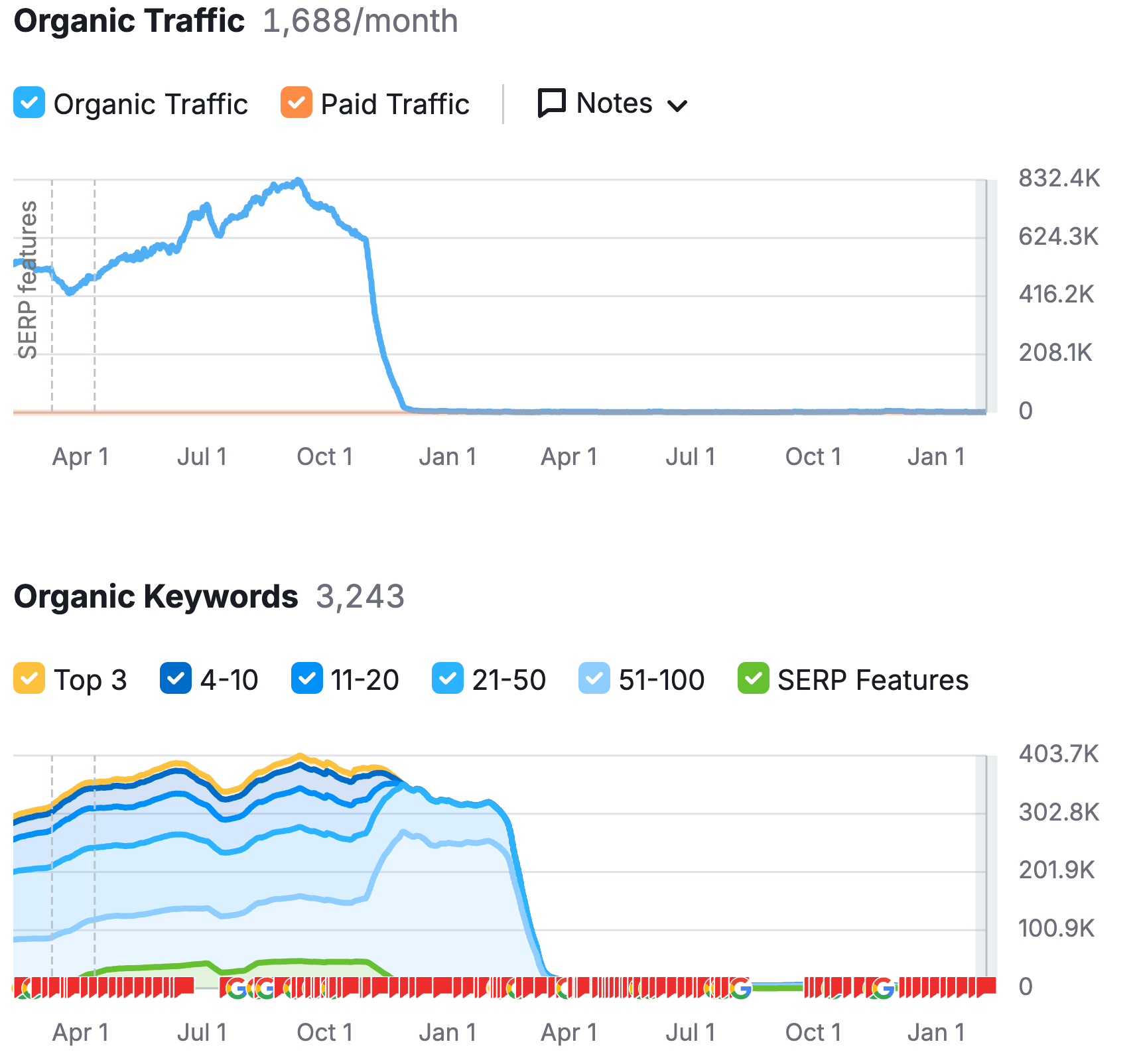

A few folks have flown a little too close to the sun while trying to find out.

I feel that the push for EEAT is the single best thing that has happened in content production in my entire 15-year career.

Good writers write in their own voice, and from their own experience. It's what they are naturally good at, and what they want to do anyway.

But I think it would be naive to think that Google is pushing EEAT solely because it wants to make the internet a better place. (Although I'm sure there are people working for Google who genuinely want that.)

There's also a practical reason: Google needs fresh content to keep misinformation in check.

To make features like AI Overviews better (and goodness, they need to be better), Google needs a fresh supply of human-made content. It makes sense that it also needs to separate the human-written stuff from the slop.

Otherwise, the snake eats its own tail: new LLMs end up training on slop, and the inaccuracies are amplified further.

Sure, AI-generated content can meet the definition of being "helpful" in some cases. But that's not a terribly high bar to meet.

Human Content Will Make a Comeback

I believe relying on AI to generate content at scale will bite many businesses on the backside.

It's just a matter of time.

Many writers and editors are struggling to find work right now. I believe that we will see a resurgence in demand once the spam stops ranking.

And it will, once these patterns are detected at scale.

I'm not against LLMs in content production. I use Claude almost every day for processing data and, ironically, detecting patterns in text. It's a tool, like Google Sheets. I'm supportive of people who want to use it.

Does that mean I think an LLM can replace your content writer?

Well, put it this way: would you fire your data analyst because you bought a calculator?

A bunch of freelance writers, editors, and SEO professionals got together to create Human Made. We're here to prove that human-written content is content that people actually want to read. And we're here for the resurgence.